Project: FLIF

https://github.com/FLIF-hub/FLIF

“

FLIF is a lossless image format based on MANIAC compression. MANIAC (Meta-Adaptive Near-zero Integer Arithmetic Coding) is a variant of CABAC (context-adaptive binary arithmetic coding), where the contexts are nodes of decision trees which are dynamically learned at encode time.

FLIF outperforms PNG, FFV1, lossless WebP, lossless BPG and lossless JPEG2000 in terms of compression ratio.

Moreover, FLIF supports a form of progressive interlacing (essentially a generalization/improvement of PNG’s Adam7) which means that any prefix (e.g. partial download) of a compressed file can be used as a reasonable lossy encoding of the entire image.

For more information on FLIF, visit https://flif.info

”

To profile the project, I wrote a script that records the program's run time.

I ran the program through Linux system() calls and recorded its run time. To reduce the effect of other variables on the run time and get a more accurate measurement, I ran the program 5 times and took the average of the 5 runs as its run time.

To build a benchmark test case long enough to run for about 4 minutes, I created 4K, 8K, and 16K images as test samples.

Result for the 8K image on the Xerxes server:

```
./flif test8k.png out.flif
Run 1/5 time: 112.959
Run 2/5 time: 113.265
Run 3/5 time: 113.794
Run 4/5 time: 112.956
Run 5/5 time: 113.011
Avg: 113.197
```

Next, I needed to run the test on the samples I created at different optimization levels, so the test script had to be modified.

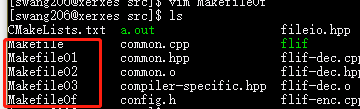

First, I created 4 different Makefiles (MakefileO1, MakefileO2, MakefileO3 and MakefileOf) so that my script could build the program at different optimization levels.

Then, in my script, after each test case I clean the build, compile it again with the next Makefile, and run the test case.

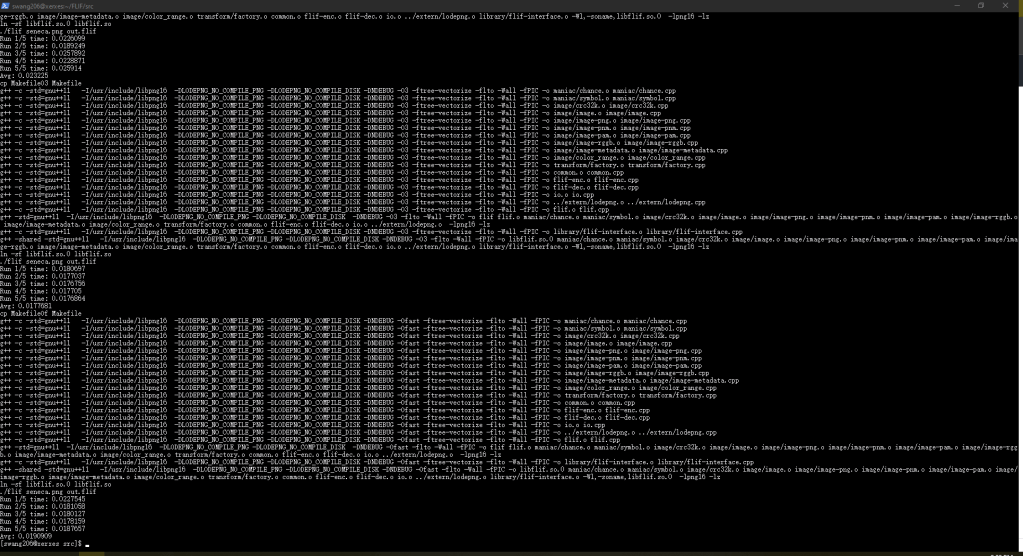

To test the script, I selected a small image as a sample.

It seemed to work well. Next, I modified the script to keep only the output I needed: the run times and a few other key details.

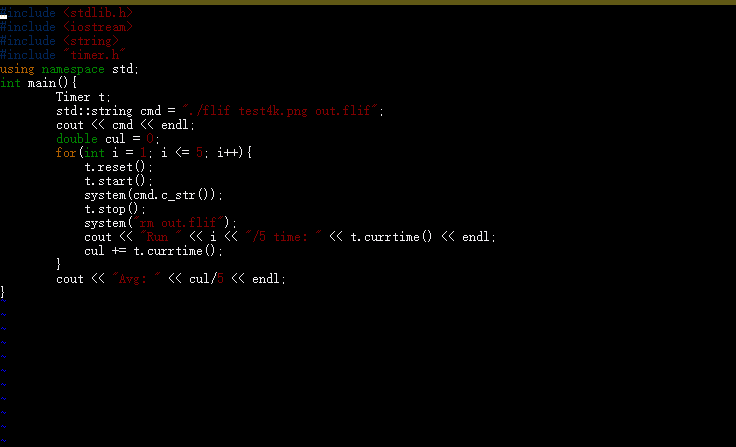

The script code is:

```cpp
#include <cstdlib>   // system()
#include <iostream>
#include <string>
#include "timer.h"   // Timer class used to time each run (header not shown here)

using namespace std;

int main() {
    Timer t;
    system("make clean > /dev/null");  // discard noisy output

    const string cmd = "./flif seneca.png out.flif";
    // One Makefile per optimization level
    const string builds[4] = {"cp MakefileO1 Makefile", "cp MakefileO2 Makefile",
                              "cp MakefileO3 Makefile", "cp MakefileOf Makefile"};
    const int runs = 5;

    for (const string& build : builds) {
        cout << build << endl;
        system((build + " > /dev/null").c_str());  // discard noisy output
        // Append the build output to a file to log the build procedure
        system("make all >> buildinfo.txt");

        cout << cmd << endl;
        double total = 0;
        for (int i = 1; i <= runs; i++) {
            t.reset();
            t.start();
            system(cmd.c_str());
            t.stop();
            system("rm out.flif");  // remove the output so every run starts the same way
            cout << "Run " << i << "/" << runs << " time: " << t.currtime() << endl;
            total += t.currtime();
        }
        cout << "Avg: " << total / runs << endl;

        system("make clean > /dev/null");  // discard noisy output
    }
    return 0;
}
```

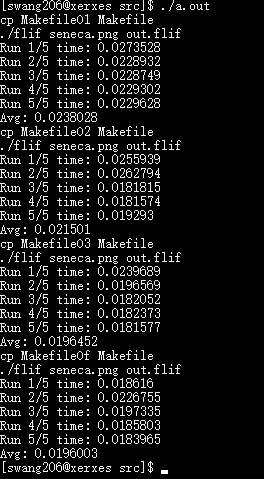

output:

Finally, I just need to switch to the correct sample image and redirect the output to a file, so I can do other things while the test runs.

```
./swtest.out > 8k_result.txt
```

This blog will be updated when I get the results from both the Xerxes server (x86) and Israel (AArch64).

Results on Xerxes (8K image):

O1:

```
Run 1/5 time: 136.447
Run 2/5 time: 136.021
Run 3/5 time: 136.356
Run 4/5 time: 136.181
Run 5/5 time: 136.28
Avg: 136.257
```

O2:

```
Run 1/5 time: 113.303
Run 2/5 time: 113.05
Run 3/5 time: 113.058
Run 4/5 time: 113.007
Run 5/5 time: 113.434
Avg: 113.17
```

O3:

```
Run 1/5 time: 115.2
Run 2/5 time: 115.248
Run 3/5 time: 115.254
Run 4/5 time: 115.537
Run 5/5 time: 115.56
```

Ofast:

```
Run 1/5 time: 114.524
Run 2/5 time: 114.434
Run 3/5 time: 114.959
Run 4/5 time: 114.584
Run 5/5 time: 114.871
Avg: 114.675
```

Results on Israel (AArch64):

O1:

```
Run 1/5 time: 480.112
Run 2/5 time: 482.835
Run 3/5 time: 487.211
Run 4/5 time: 481.755
Run 5/5 time: 486.157
Avg: 483.614
```

O2:

```
Run 1/5 time: 342.601
Run 2/5 time: 343.368
Run 3/5 time: 343.536
Run 4/5 time: 343.573
Run 5/5 time: 350.155
Avg: 344.647
```

O3:

```
Run 1/5 time: 354.89
Run 2/5 time: 358.898
Run 3/5 time: 359.501
Run 4/5 time: 354.217
Run 5/5 time: 349.274
Avg: 355.356
```

Ofast:

```
Run 1/5 time: 351.706
Run 2/5 time: 350.837
Run 3/5 time: 345.919
Run 4/5 time: 350.003
Run 5/5 time: 346.357
Avg: 348.964
```

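According to the results, the optimization level clearly affects the run time of the program. On both machines O1 was the slowest: O2 was about 17% faster than O1 on Xerxes (113.17 vs. 136.257) and about 29% faster on Israel (344.647 vs. 483.614).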

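O3 is often expected to be the fastest optimization level, but in this test O2 and O3 did not differ much, and O2 was actually slightly faster on both machines (every O3 run on Xerxes took between 115.2 and 115.56, and the O3 average on Israel was 355.356 vs. 344.647 for O2). Ofast landed between O2 and O3 on both machines.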